Last April, to no one in particular, I asked the following question:

"What's the shortest possible trip (in miles) to see every MLB team play at least one game this season?"

It became clear, after a brief discussion with some friends, that the shortest possible trip is somewhere on the order of a hundred miles. Citi Field in Queens and Yankee Stadium in the Bronx are only about 6 miles apart. Since the Mets and the Yankees are in different leagues and each team plays at least one series at home against every other team in its own league, you could just spend the whole season going back and forth between the two stadiums. (In fact, I’d be surprised if at least one New York baseball fan with time and money to burn hadn’t done exactly this.)

In order to avoid this “trivial” solution, a modification to the puzzle would have to be introduced. After throwing around a bunch of attempts, I hit upon the perfect goal: 15 games, 15 stadiums, 30 teams. You’d see no team play more than once, you’d be in no stadium more than once.

Now that I had a problem worth solving, how to solve it? The sheer number of games makes it pretty clear that this is a task that can’t be solved by brute force. There are 2430 games in a regular season of baseball. The number of possible selections of 15 of those games is about 4.45 × 10^38. Even if I could check a trillion schedules a second (which I can’t), it would still take 14 billion billion years (at which point, even the Red Sox would probably have a new stadium). If you visualized the problem as a graph, each game would be a node and each travel day would be an edge. The problem was that every game had an edge that led to every game that came after it. What I needed to do first was prune this graph.
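As a rough sanity check on those numbers, here is a small Python sketch of the arithmetic. The constant names are mine, and the "trillion schedules a second" rate is simply the hypothetical figure from the paragraph above.

```python
import math

GAMES_PER_SEASON = 2430
TRIP_LENGTH = 15
CHECKS_PER_SECOND = 1e12              # hypothetical "trillion schedules a second"
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Number of unordered selections of 15 games out of 2430.
selections = math.comb(GAMES_PER_SEASON, TRIP_LENGTH)

# Time to check every selection at the hypothetical rate.
years = selections / CHECKS_PER_SECOND / SECONDS_PER_YEAR

print(f"{selections:.3g} selections, roughly {years:.2g} years")
```

The count comes out a little under 4.5 × 10^38, and the running time lands in the tens of billions of billions of years, which agrees with the estimate above.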

So I made some assumptions for the sake of making a reasonable road trip. I wouldn’t want more than 2 days off between games, and I wouldn’t want to travel more than 500 miles on any given day. This change trimmed the 3-million-plus edges into a mere 86,000. But an exhaustive search would still take a prohibitively long time: at that same impossible speed, I’d have my answer in merely 11 billion years. I made the problem more than a billion times smaller, but realistically I wasn’t any closer to the solution.
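One way to express that pruning rule is sketched below. Several things here are my assumptions rather than details from the original code: the `Game` record, the use of great-circle (haversine) distance between stadium coordinates, the requirement that the next game be at least one day later, and the reading of "500 miles per day" as a budget that accumulates over the days between two games.

```python
from dataclasses import dataclass
from datetime import date
from math import asin, cos, radians, sin, sqrt

MAX_OFF_DAYS = 2            # at most 2 days off between games
MAX_MILES_PER_DAY = 500.0   # at most 500 miles of travel per day
EARTH_RADIUS_MILES = 3958.8

@dataclass(frozen=True)
class Game:                 # hypothetical record type for this sketch
    day: date
    home: str
    away: str
    lat: float              # home stadium latitude, degrees
    lon: float              # home stadium longitude, degrees

def miles_between(a, b):
    """Great-circle distance between the stadiums of two games."""
    lat1, lon1, lat2, lon2 = map(radians, (a.lat, a.lon, b.lat, b.lon))
    h = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(h))

def is_edge(a, b):
    """True if game b is a reasonable next stop after game a."""
    gap = (b.day - a.day).days
    if gap < 1 or gap > MAX_OFF_DAYS + 1:
        return False        # same day, earlier, or too many days off
    return miles_between(a, b) <= MAX_MILES_PER_DAY * gap

def build_edges(games):
    """Map each game to the list of games that may follow it."""
    return {a: [b for b in games if is_edge(a, b)] for a in games}
```

Road miles are longer than great-circle miles, so a real version would want driving distances; the structure of the check stays the same.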

It was about this time that I decided I was never going to get the perfect answer. I would have to resign myself to a Good Enough plan that could be calculated before the heat death of the universe (or better yet, before Opening Day). I brushed up on my Traveling Salesman Problem and pathfinding algorithms like A*, Dijkstra’s, and Floyd-Warshall. But my problem had a couple of quirks that made those approaches unsuitable:

- With both A* and Dijkstra's, you have defined starting and destination nodes. I did not want to specify either. I wanted the schedule (and the map) to dictate the best time of the season to take the trip.

- Pretty much every algorithm I read about optimizes for total distance, and nothing else. A perfect road trip might start at Wrigley and end at US Cellular a month later, but it most definitely would not be the shortest distance between those two points.

- Most importantly, I was very particular about exactly which nodes could be on the same schedule. Two games might not have an overlap, but once I decided to go to both of them, the options for the third game get narrowed down. Likewise for every step along the way.

After three or four false starts (and one moment where a bug in my data structure made an exhaustive search run a couple quadrillion times faster than it should), I happened upon a promising technique. A commenter on Stack Overflow recommended (in response to a purposely vague question) doing a sort of prioritized breadth-first search. What I ended up doing was starting with a list of all of the one-game plans (2430 plans, one for each game). And then I did this:

- Remove the plan with the shortest average leg length from the list (ties are unimportant -- pick arbitrarily)

- Look at every game that you could add to the end of that plan that meets the restrictions: 2 or fewer off days, no more than 500 miles of travel per day.

- For every one of those games, check if it's legal to add to this plan (check for team duplications against every game already on the plan)

- If there are no duplicates, add it to the list of plans. Since each game has a couple dozen possible "next" games, this will likely result in the number of plans in the list growing.

- If there are more than some number of plans in the list, discard the longest ones. (After some experimentation, I went with about half a million. Much more on this another time.)

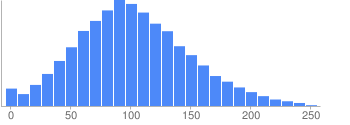

And I repeated until the “shortest plan” was 15 games long. On the first try, it took about 3 and a half hours on my laptop (Core i5 with 4GB of RAM) and had to inspect more than 12.5 million potential plans before finding one that was a full 15 games long. The solution was almost 5,500 miles long, and included back-to-back 800-mile legs from Los Angeles to Denver and then to St. Louis. That did not strike me as optimal.

I considered what could be preventing the discovery of a good plan: the problem is that the shortest eight-game plan might not yield any short nine-game plans, and if the list is full and discards the longer eight-game plans before they can even be checked, then it will never pursue the most promising leads. In truth, I run four lists in parallel, and they fit 2^19 − 1 elements each (524,287, a little over 500k). But the lists are full after only three minutes of inspecting plans (less than half a million). These lists take up more than 2GB of memory right now.
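For reference, the loop described in the list above can be sketched roughly as follows. This is a simplified illustration, not the code used for this post: it keeps a single heap rather than four parallel lists, represents a game as a `(day, home, away)` tuple, and takes the pruned edges and the distance function as parameters (`next_games` and `miles`, both hypothetical names). It also assumes every game is played at the home team's own stadium, so never repeating a team also means never repeating a stadium.

```python
import heapq
from itertools import count

def find_trip(games, next_games, miles, target_len=15, max_plans=500_000):
    """Repeatedly extend the plan with the shortest average leg length.

    games      -- iterable of (day, home, away) tuples
    next_games -- function: game -> games allowed to follow it
    miles      -- function: (game, game) -> travel distance
    Returns (plan, total_miles), or None if the list runs dry.
    """
    tie = count()  # arbitrary tie-breaker; plans are never compared directly
    # Heap entries: (average leg length, tie-breaker, total miles, plan)
    heap = [(0.0, next(tie), 0.0, (g,)) for g in games]
    heapq.heapify(heap)

    while heap:
        _, _, total, plan = heapq.heappop(heap)
        if len(plan) == target_len:
            return plan, total
        seen = {team for _, home, away in plan for team in (home, away)}
        for game in next_games(plan[-1]):
            _, home, away = game
            if home in seen or away in seen:
                continue  # a team would appear twice
            new_total = total + miles(plan[-1], game)
            legs = len(plan)  # the extended plan has this many legs
            entry = (new_total / legs, next(tie), new_total, plan + (game,))
            heapq.heappush(heap, entry)
        if len(heap) > max_plans:
            # List is full: throw away the plans with the longest average legs.
            heap = heapq.nsmallest(max_plans, heap)
            heapq.heapify(heap)
    return None
```

Trimming with `nsmallest` on every overflow is simple but slow at half a million entries; a bounded structure that supports cheap removal at both ends would be a better fit. The sketch also shows the weakness discussed above: once the list is full, longer plans can be discarded before they are ever extended.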

I chose to try the script on an Extra Large Amazon Elastic Compute Cloud instance. This includes not quite as much CPU power as my laptop, but four times the memory. I also changed the way the algorithm treated “the shortest average leg length”, by giving plans with more legs a bonus. It found this schedule in less than ten minutes, and failed to find a better one even after searching through 20+ million more. (This is a thousand miles shorter than the one I found earlier, and the only really long leg is from Boston to Minneapolis.)
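The exact form of that bonus is not spelled out here, so the following is only one plausible shape for it: subtract a fixed amount per leg from the average leg length. The function name and the `bonus_per_leg` value are invented for illustration.

```python
def priority(total_miles, legs, bonus_per_leg=10.0):
    """Score a plan; lower scores are explored first.

    bonus_per_leg is a made-up tuning knob, not a value from the post.
    """
    if legs == 0:
        return 0.0  # one-game plans have no legs yet
    average_leg = total_miles / legs
    return average_leg - bonus_per_leg * legs

# With the bonus, an 8-leg plan averaging 100 miles per leg is explored
# before a 2-leg plan averaging 90; without it, the order is reversed.
long_plan = priority(800.0, 8)
short_plan = priority(180.0, 2)
```

The intent of any scheme like this is to keep longer, nearly complete plans from being crowded out of the list by a flood of very short ones.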

2012-07-18: Blue Jays @ New York Yankees

- Travel 6.5 mi

2012-07-20: Dodgers @ New York Mets

- Travel 91.2 mi

2012-07-21: Giants @ Philadelphia Phillies

- Travel 121.6 mi

2012-07-22: Braves @ Washington (DC) Nationals

- Travel 306.6 mi

2012-07-24: Tigers @ Cleveland Indians

- Travel 309.9 mi

2012-07-27: Cardinals @ Chicago Cubs

- Travel 76.4 mi

2012-07-30: Astros @ Milwaukee Brewers

- Travel 326.4 mi

2012-07-31: Padres @ Cincinnati Reds

- Travel 250.6 mi

2012-08-03: Angels @ Chicago White Sox

- Travel 408.8 mi

2012-08-06: Diamondbacks @ Pittsburgh Pirates

- Travel 196.9 mi

2012-08-07: Mariners @ Baltimore Orioles

- Travel 358.4 mi

2012-08-08: Rangers @ Boston Red Sox

- Travel 1128 mi

2012-08-11: Rays @ (Minneapolis) Minnesota Twins

- Travel 414.8 mi

2012-08-14: Athletics @ Kansas City (Missouri) Royals

- Travel 564.5 mi

2012-08-16: Marlins @ (Denver) Colorado Rockies

Total distance: 4560.5 mi

Remember, this isn’t the best you could do, but it’s probably close, and it was computable in a very reasonable amount of time. Interestingly, the shortest 13-game road trip is about half as long (about 2400 miles, with no leg longer than 350). Picking up those last couple of games is quite expensive. It’s been suggested that seeing a team more than once for the sake of saving several hundred miles might be acceptable – but establishing an algorithmic rule might take some time. Stay tuned for further tweaks!

Now: time to rent a car and block off a month of vacation.

Here is the final version of the code that I used for this post.
